Your Guide to Developing an AI-Driven TPRM Program

Every practitioner dreams of an AI-based automation system, and third-party risk management (TPRM) is no exception. The need is clear: substantial time savings on manual TPRM activities, including collecting data from diverse platforms and curating that data.

However, it is not always obvious where to start. Here are the steps for developing an AI-driven TPRM project with helpful tips:

Step 1: Feasibility Study

While an AI project should in theory bring automation and efficiency, it may not turn out that way in practice. The project could prove much more costly than foreseen because of accuracy problems, maintenance issues, and more. A feasibility study is therefore worth doing before you start planning development or looking for vendors.

Issues to consider in this phase are:

Objectives and Scope

- Identify the primary needs of the TPRM project, whether it is just about automating the current manual process or adding new features as well.

Stakeholder Analysis

- Identify all stakeholders: your third parties as well as internal departments (e.g., IT, Legal, Compliance)

- Assess their needs, expectations, and concerns, such as:

- Data sharing

- AI safety & security

- Technology readiness

Current State Assessment

- Evaluate the existing third-party management processes and systems:

- Number of experts allocated

- Expert hours invested

- Identify any gaps and inefficiencies in the current (manual) setup

Market Research and Benchmarking

- Conduct market research for AI-driven TPRM programs

- Research vendors, tools, etc.

- Benchmark against similar organizations to understand how they use AI to automate third-party risk management (if they are willing to share)

Regulatory and Compliance Considerations

- Review relevant regulations and compliance requirements for safe & ethical AI

- Ensure the technology will meet TPRM requirements at a defined minimum accuracy

Step 2: Planning

Once the feasibility study has yielded positive results, the planning phase comes next. One key aspect to consider when planning an AI-driven TPRM project is whether it will be fully outsourced, partially outsourced, or built in-house. When the project is outsourced, it is important to beware of false AI claims.

Issues to consider in this phase are:

- Convert customer expectations into technical requirements

- Set KPIs such as accuracy, speed, cost

- What AI & automation features are needed

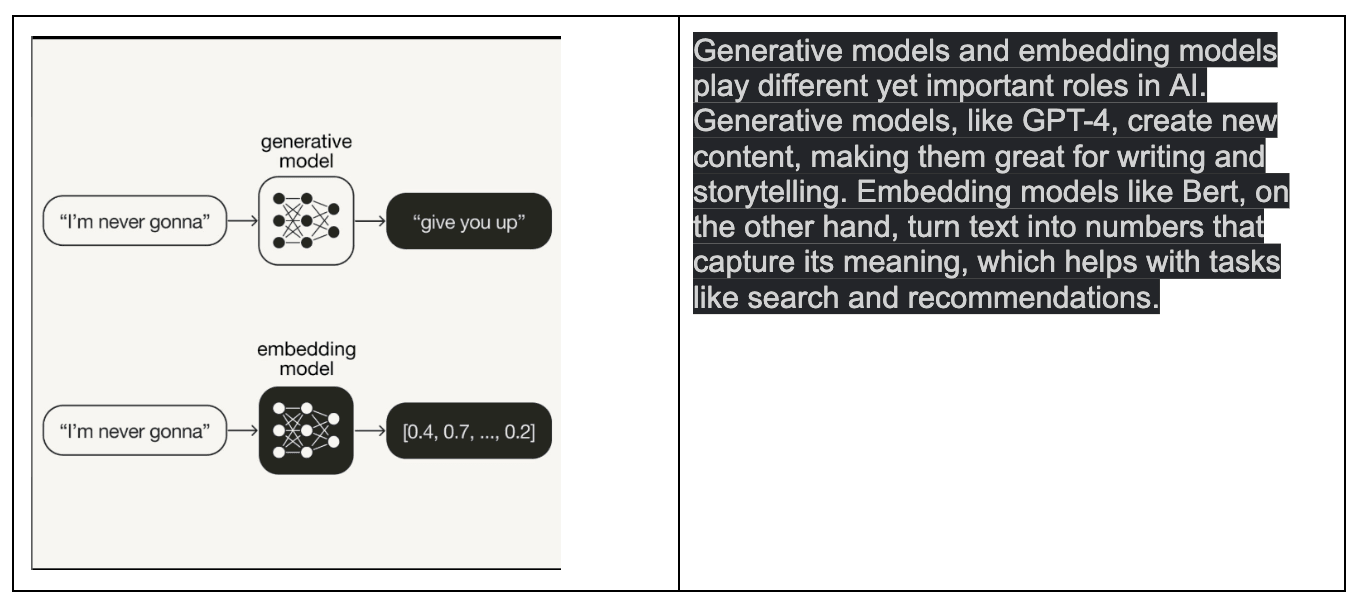

- GenAI, embedding models, etc.

- Other than AI, what tools & services are needed for document and questionnaire automation

- Outsourcing decisions

- Are there enough in-house ML/AI engineers for development

- Cost of the tools, software

- Maintenance of the system

- Skills and personnel needed to run the system

- Risk Analysis

- The most obvious risk in an AI project is software that quickly becomes outdated, a consequence of how fast the AI landscape changes.

Step 3: Development / PoC

This stage is either in-house development or a proof of concept (PoC) of the selected tools or software. If you want to develop your AI-driven TPRM program in-house:

- Decide on the actual LLM/GenAI framework: There are many open-source models out there, such as Mistral and Llama, as well as proprietary LLMs such as OpenAI's GPT models and Google's Gemini.

- Data Methodology: RAG or fine-tuning: RAG stands for Retrieval-Augmented Generation. It is a technique in natural language processing (NLP) where a generative model is enhanced with external information retrieved from a database or a document store. This approach improves the relevance and accuracy of the generated responses by grounding them in factual data, which reduces hallucination. When assessing a new vendor, a RAG system can retrieve relevant data from past audit reports, compliance documents, legal records, and performance metrics stored in the company’s database. Fine-tuning, by contrast, further trains the model itself on domain-specific data.

- AI Governance

- Whether selecting an AI-powered product or developing a custom solution, organizations must ensure that these technologies are governed effectively to mitigate risks and maximize benefits. Key AI governance concerns include whether the AI system provides clear explanations for its risk assessments and decisions, how the AI product handles sensitive data, whether it is used ethically, and how model drift or performance degradation over time is handled.
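The retrieval step of the RAG approach described above can be sketched in a few lines of Python. This is a minimal illustration, not a production design: the document store, the vendor name "Acme", the document contents, and the function names (`tokenize`, `cosine`, `retrieve`, `build_prompt`) are all hypothetical. Word-count cosine similarity stands in for the embedding model a real system would likely use, and the sketch stops at building the prompt rather than calling any LLM.

```python
import math
import re
from collections import Counter

# Hypothetical document store; a real system would hold chunks of actual
# audit reports, compliance documents, legal records, and metrics.
DOCUMENTS = {
    "audit_2023": "Audit report: vendor Acme passed the SOC 2 audit "
                  "with two minor findings on access control.",
    "legal_2022": "Legal record: no litigation or regulatory sanctions "
                  "recorded against vendor Acme.",
    "perf_q4": "Performance metrics: vendor Acme met 99.5 percent uptime "
               "in the last quarter.",
}


def tokenize(text):
    """Lowercase the text and split it into alphanumeric tokens."""
    return re.findall(r"[a-z0-9]+", text.lower())


def cosine(a, b):
    """Cosine similarity between two token-count vectors (Counters)."""
    dot = sum(a[t] * b[t] for t in a if t in b)
    norm = (math.sqrt(sum(v * v for v in a.values()))
            * math.sqrt(sum(v * v for v in b.values())))
    return dot / norm if norm else 0.0


def retrieve(query, documents, k=2):
    """Return the ids of the k documents most similar to the query."""
    q = Counter(tokenize(query))
    scored = [(cosine(q, Counter(tokenize(text))), doc_id)
              for doc_id, text in documents.items()]
    scored.sort(reverse=True)
    return [doc_id for score, doc_id in scored[:k] if score > 0]


def build_prompt(query, documents, k=2):
    """Assemble a prompt that grounds the model in the retrieved text."""
    context = "\n".join(f"[{doc_id}] {documents[doc_id]}"
                        for doc_id in retrieve(query, documents, k))
    return ("Answer using only the context below.\n\n"
            f"Context:\n{context}\n\nQuestion: {query}")


if __name__ == "__main__":
    print(build_prompt("Did the vendor pass the SOC 2 audit?", DOCUMENTS))
```

The prompt produced by `build_prompt` would then be sent to whichever LLM was selected in the previous step. Keeping the document ids in the context also supports the governance concern about explainability, since an answer can point back to the records it was based on.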

Step 4: Execution

During execution, always record performance and accuracy in production to make sure the system works as planned.

- Review performance metrics as needed during execution.

- Monitor results through in-house experts, human labelers, or direct customer feedback.
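One way to record accuracy in production is to compare each model output with the label a human reviewer assigns and track a rolling accuracy. The sketch below assumes that setup; the class name `AccuracyMonitor`, the window size, the threshold, and the risk labels are hypothetical choices for illustration, not recommended values.

```python
from collections import deque


class AccuracyMonitor:
    """Tracks rolling accuracy of model outputs against human review."""

    def __init__(self, window=100, threshold=0.9):
        # Keep only the most recent `window` comparisons.
        self.results = deque(maxlen=window)
        self.threshold = threshold

    def record(self, predicted, reviewed):
        """Store whether the model output matched the reviewer's label."""
        self.results.append(predicted == reviewed)

    def accuracy(self):
        """Rolling accuracy, or None if nothing has been recorded yet."""
        if not self.results:
            return None
        return sum(self.results) / len(self.results)

    def needs_attention(self):
        """True when rolling accuracy has fallen below the threshold."""
        acc = self.accuracy()
        return acc is not None and acc < self.threshold


if __name__ == "__main__":
    monitor = AccuracyMonitor(window=4, threshold=0.75)
    # (model risk rating, reviewer risk rating) pairs; hypothetical data.
    for predicted, reviewed in [("high", "high"), ("low", "low"),
                                ("low", "high"), ("medium", "low")]:
        monitor.record(predicted, reviewed)
    print(monitor.accuracy(), monitor.needs_attention())
```

A rolling window is used here so that recent degradation is not hidden by a long history of correct results.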

You can use various benchmarks tailored to different types of documents to assess the model’s performance. New benchmarks appear frequently on LLM leaderboards, and Hugging Face is one platform that hosts many of them. If your project involves fine-tuning a model, you can evaluate its performance against well-known models on these benchmarks. This comparison helps you understand where your model excels and where there is room for improvement, and gives you more confidence that your results are robust and reliable.

Step 5: Updates

- Updates to GenAI service

Keeping GenAI services updated and improved is an ongoing process. Regularly refining and enhancing your AI services is crucial to maintaining their effectiveness and keeping up with advancements in the field. In the fast-paced AI landscape of 2024, falling behind can happen quickly if you don’t stay current. Therefore, the product you choose must be adaptable and capable of evolving with industry trends.

Step 6: The Future of Generative AI in TPRM

Despite these challenges, the future of AI in TPRM is promising. Continuous advancements in technology are making AI models more efficient and accurate. Fine-tuning models for specific applications, as demonstrated in TPRM, will become increasingly important, allowing for more tailored and effective risk management solutions.

As the technology matures, one can expect more robust frameworks and guidelines to address the ethical and legal challenges associated with generative AI. Collaborative efforts between technologists, ethicists, and policymakers will be crucial in shaping these frameworks, ensuring that the benefits of generative AI are harnessed responsibly.

To learn more about AI in TPRM, read our research article, “Artificial Intelligence in TPRM, Volume 2: The NLP Engineer’s Guide to Building a Domain-Aware AI.”